data breach

What is a data breach?

A data breach is a cyber attack in which sensitive, confidential or otherwise protected data has been accessed or disclosed in an unauthorized fashion. Data breaches can occur in any size organization, from small businesses to major corporations. They may involve personal health information (PHI), personally identifiable information (PII), trade secrets or other confidential information.

Common data breach exposures include personal information, such as credit card numbers, Social Security numbers, driver's license numbers and healthcare histories, as well as corporate information, such as customer lists and source code.

If anyone who isn't authorized to do so views personal data, or steals it entirely, the organization charged with protecting that information is said to have suffered a data breach.

If a data breach results in identity theft or a violation of government or industry compliance mandates, the offending organization can face fines, litigation, reputation loss and even loss of the right to operate the business.

14 ways a data breach can happen

While the types of data breaches are quite varied, they can almost always be attributed to a vulnerability or gap in an organization's security posture that cybercriminals use to gain access to its systems or data. When this happens, the financial consequences can be devastating. According to the Federal Bureau of Investigation's 2021 "Internet Crime Report," reported cybercrime losses totaled $6.9 billion in 2021. Much of this loss is due to data breaches.

Looking at the current cyber landscape, potential causes for a data breach can include the following:

- Accidental data leak or exposure. Configuration mistakes or lapses in judgment when handling data can create opportunities for cybercriminals.

- Data on the move. Unencrypted data can be intercepted while moving within a corporate local area network, in a wide area network or in transit to one or more clouds. Uniform cloud security and end-to-end data encryption are two ways organizations can bolster their protection for data on the move.

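- Malware, ransomware or Structured Query Language (SQL) injection. Gaining access to systems or applications opens the door to malware and malware-related activities, such as ransomware. Applications that build database queries directly from unvalidated user input are also open to SQL injection, in which attackers trick the database into running commands of their choosing.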

- Phishing. While phishing often uses malware to steal data, it can also use other methods to gather information that can be used to gain access to data.

- Distributed denial of service (DDoS). Threat actors can use a DDoS attack as a way to distract security administrators so they can gain access to data using alternative methods. Additionally, modifications by the business to mitigate an attack can lead to misconfigurations that create new data theft opportunities.

- Recording keystrokes. This form of malicious software, known as a keylogger, records every keystroke entered into a computing device to steal the usernames and passwords that protect access to data.

- Password guessing. When systems allow unlimited login attempts or accept simple passwords, attackers can use password cracking tools to gain access to systems and data. Password managers help users create complex passwords and keep them organized and centrally secured.

- Physical security breach. Gaining access to a physical location or network where sensitive data is stored can cause serious loss or damage to an enterprise.

- Card skimmer and point-of-sale intrusion. A user-focused threat reads credit or debit card information that can later be used to infiltrate or bypass security measures.

- Lost or stolen hardware. Hardware that's left unattended or insecure provides an easy and low-tech way to steal data.

- Social engineering. Cybercriminals manipulate people into granting unauthorized access to systems or processes those people control. These threats tend to focus on communication and collaboration tools and, more recently, on identity theft via social media.

- Lack of access controls. Access controls that are either missing or outdated are an obvious entry point that can lead to a breach of one system with the additional threat of lateral movement. One example of a lack of access controls is not implementing multifactor authentication (MFA) on all systems and applications.

- Backdoor. Any undocumented method of gaining access -- either intentional or unintentional -- is an obvious security risk that often leads to data loss.

- Insider threat. Numerous cybersecurity incidents come from internal users who already have access to or knowledge of networks and systems. This is why monitoring user actions is so critical.
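Several of these causes exploit weak application code, and SQL injection is the easiest to show concretely. The following minimal Python sketch uses the standard library's `sqlite3` module with a hypothetical in-memory `users` table filled with dummy values. It contrasts a query built by pasting user input into the SQL text with a parameterized query, in which the database driver treats the input strictly as data.

```python
import sqlite3

# Hypothetical in-memory table standing in for a customer database (dummy data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, ssn TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "111-11-1111"), ("bob", "222-22-2222")],
)


def lookup_unsafe(name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL statement.
    query = f"SELECT name, ssn FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(name: str) -> list:
    # Parameterized query: the "?" placeholder keeps input out of the SQL syntax.
    return conn.execute(
        "SELECT name, ssn FROM users WHERE name = ?", (name,)
    ).fetchall()


attack = "x' OR '1'='1"
print(len(lookup_unsafe(attack)))  # 2: the injected OR clause matches every row
print(len(lookup_safe(attack)))    # 0: no user has that literal name
```

Parameterized queries are a widely recommended defense because they fix the structure of the statement before any user input is bound to it. Input validation and least-privilege database accounts add further layers.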

Data breach regulations

A number of industry guidelines and government compliance regulations mandate strict controls of sensitive information and personal data to avoid data breaches.

For financial institutions and any other business that accepts, processes, stores or transmits payment card data, the Payment Card Industry Data Security Standard (PCI DSS) dictates how cardholder data must be protected and who may handle and use it. More broadly, examples of PII include financial information, such as bank account and credit card numbers, and contact information, such as names, addresses and phone numbers.

Within the healthcare industry, the Health Insurance Portability and Accountability Act (HIPAA) regulates who may see and use PHI, such as a patient's name, date of birth, Social Security number and healthcare treatments. HIPAA also sets penalties for unauthorized access and disclosure.

No breach-specific regulations govern the protection of intellectual property. However, a breach of that type of data can still lead to significant legal disputes and regulatory compliance issues.

Data breach notification laws

To date, all 50 states, the District of Columbia, Guam, Puerto Rico and the U.S. Virgin Islands have data breach notification laws that require both private and public entities to notify individuals, whether customers, consumers or users, of breaches involving PII. The deadline to notify individuals affected by breaches can vary from state to state.

On March 15, 2022, President Joe Biden signed the Cyber Incident Reporting for Critical Infrastructure Act of 2022 into law. It requires organizations in certain critical infrastructure sectors to report covered cybersecurity incidents to the Cybersecurity and Infrastructure Security Agency (CISA), part of the Department of Homeland Security, within 72 hours of reasonably believing that an incident has occurred.

The European Union's (EU) General Data Protection Regulation (GDPR), which went into effect in May 2018, also requires organizations to notify the supervisory authority of a breach within 72 hours of becoming aware of it. GDPR applies not only to organizations located within the EU, but also to organizations located outside the EU if they offer goods or services to, or monitor the behavior of, EU data subjects.
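Both the Cyber Incident Reporting for Critical Infrastructure Act and GDPR use a 72-hour reporting window. The following Python sketch shows only the deadline arithmetic, assuming the clock starts at a single, known awareness time. The function names are hypothetical, and the sketch ignores the precise legal triggers, exceptions and the differing state deadlines mentioned above.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the 72-hour clock starts when the organization becomes aware of
# (or reasonably believes in) the incident.
REPORTING_WINDOW = timedelta(hours=72)


def reporting_deadline(aware_at: datetime) -> datetime:
    """Return the latest time a report can be filed within the window."""
    if aware_at.tzinfo is None:
        # Naive datetimes are ambiguous across time zones; refuse them.
        raise ValueError("use a timezone-aware datetime")
    return aware_at + REPORTING_WINDOW


def is_late(aware_at: datetime, reported_at: datetime) -> bool:
    """True if the report was filed after the deadline."""
    return reported_at > reporting_deadline(aware_at)


aware = datetime(2022, 3, 15, 9, 30, tzinfo=timezone.utc)
print(reporting_deadline(aware))  # 2022-03-18 09:30:00+00:00
```

Using timezone-aware timestamps matters here because incident teams, regulators and affected systems are often in different time zones.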

In May 2019, the Data Breach Prevention and Compensation Act was introduced in the U.S. Congress. The bill proposed creating an Office of Cybersecurity at the Federal Trade Commission to supervise data security at consumer reporting agencies, establishing standards for effective cybersecurity at agencies such as Equifax, and imposing penalties on credit monitoring and credit reporting agencies for breaches that put customer data at risk. It has not been enacted.

How to prevent data breaches

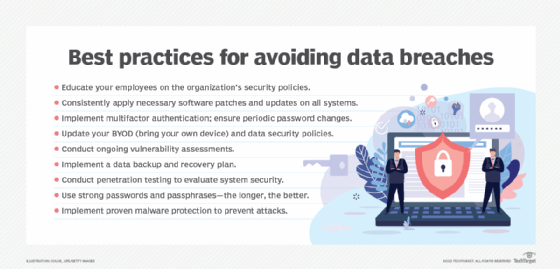

There's no one security tool or control that can prevent data breaches entirely. The most reasonable means for preventing data breaches involve commonsense security practices. These include well-known security basics, such as the following:

- Educate your employees on the organization's security best practices.

- Conduct ongoing vulnerability assessments.

- Implement a data backup and recovery plan.

- Update your organization's bring your own device, or BYOD, and data security policies.

- Conduct penetration testing.

- Implement proven malware protection.

- Use strong passwords and passphrases.

- Implement MFA, and require password changes whenever there is evidence that credentials have been compromised.

- Consistently apply the necessary software patches and updates on all systems.
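MFA is often implemented with authenticator apps that generate time-based one-time passwords (TOTP), as standardized in RFC 6238. The following Python sketch shows how such a code is derived from a shared secret and the current time. The function names are illustrative, and the secret is the public RFC 6238 test value. A production deployment would typically use a vetted library, accept a small clock-skew window and store secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238, HMAC-SHA1 variant)."""
    if at is None:
        at = time.time()
    counter = int(at // step)                 # number of 30-second steps since the Unix epoch
    msg = struct.pack(">Q", counter)          # counter as an 8-byte big-endian integer
    mac = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                   # dynamic truncation from RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Check a user-submitted code; compare_digest avoids timing side channels."""
    return hmac.compare_digest(totp(secret, at), submitted)


# RFC 6238 test secret (ASCII "12345678901234567890"); never reuse it in production.
SECRET = b"12345678901234567890"
print(totp(SECRET, at=59, digits=8))  # 94287082, the RFC 6238 test vector
```

Because the code depends on a secret stored on the user's device, a stolen password alone is not enough to log in. That is the property MFA adds.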

While these steps help prevent intrusions into an environment, information security experts also recommend encrypting sensitive data wherever it resides, whether on premises or in the cloud, and protecting it at rest, in use and in motion. If threat actors do get into the environment, encryption helps keep them from reading the actual data, provided the encryption keys themselves remain secure.

Additional measures for preventing breaches and minimizing their impact include well-written security policies for employees and ongoing security awareness training to promote those policies and educate staff. Such policies may include concepts such as the principle of least privilege, which gives employees the bare minimum of permissions and administrative rights to perform their duties.
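Least privilege is commonly enforced as a deny-by-default permission check: a request succeeds only if the role explicitly holds that permission. The following Python sketch assumes a hypothetical, hard-coded mapping of roles to permissions. Real deployments typically load these assignments from an identity provider or policy engine.

```python
# Hypothetical role-to-permission mapping; each role gets only what its duties need.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"customer:read"}),
    "billing_clerk": frozenset({"customer:read", "invoice:write"}),
    "db_admin": frozenset({"customer:read", "customer:write", "backup:restore"}),
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("support_agent", "customer:read"))   # True
print(is_allowed("support_agent", "backup:restore"))  # False
```

Denying by default means a forgotten or misspelled role fails closed rather than silently gaining access.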

In addition, organizations should have an incident response plan that can be implemented in the event of an intrusion or breach. This plan typically includes a formal process for identifying, containing and quantifying a security incident.

How to recover from a data breach

When a data breach is first identified, time is of the essence so that data can potentially be restored and further breaches limited. The following steps can be used as a guide when responding to a breach:

- Identify and segregate systems or networks that have been affected. Cybersecurity tools can help organizations determine the extent of a data breach and isolate the affected systems or networks from the rest of the corporate infrastructure. These tools also help ensure that bad actors can no longer move laterally within a network and expose more data.

- Perform a formal risk assessment of the situation. In this step, it's necessary to identify any secondary risks for users or systems that could still be in play. Examples include compromised user or system accounts and backdoors the attackers may have left behind. Forensic tools and forensic experts can collect and analyze evidence from systems and software to pinpoint exactly what happened.

- Restore systems and patch vulnerabilities. Using clean backups or brand-new hardware or software, this step rebuilds and restores affected systems as fully as possible. It also includes security fixes or workarounds to remediate any security flaws detected during the post-breach risk assessment.

- Notify affected parties. Once systems and software are back online, the next step is to notify all relevant parties of the data breach and what it means for them from a data theft standpoint. This list varies depending on the data in question. However, it often includes the following:

  - legal departments;

  - employees, customers and partners;

  - credit card companies and financial institutions; and

  - cyber risk insurance providers.

- Document lessons learned. Information and knowledge gained from the breach should be thoroughly documented to preserve the incident in writing for future reference and to help those involved understand what mistakes were made so they are less likely to occur in the future.
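Identifying affected systems and confirming that restored systems are clean often involves comparing files against known-good hashes. The following Python sketch assumes a baseline was recorded while the system was trusted. The function names and sample files are hypothetical, and dedicated file integrity monitoring tools do this at scale with tamper-resistant baseline storage.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_baseline(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {str(p.relative_to(root)): sha256_of(p) for p in sorted(root.rglob("*")) if p.is_file()}


def diff_against_baseline(root: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Report files that changed, appeared or disappeared since the baseline."""
    current = build_baseline(root)
    return {
        "modified": sorted(k for k in baseline.keys() & current.keys() if baseline[k] != current[k]),
        "added": sorted(current.keys() - baseline.keys()),
        "missing": sorted(baseline.keys() - current.keys()),
    }


# Usage: baseline a directory, simulate tampering, then diff.
with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    (root / "app.cfg").write_text("debug=false")
    (root / "notes.txt").write_text("hello")
    baseline = build_baseline(root)
    (root / "app.cfg").write_text("debug=true")     # simulated tampering
    (root / "dropper.sh").write_text("echo pwned")  # simulated planted file
    (root / "notes.txt").unlink()                   # simulated deletion
    result = diff_against_baseline(root, baseline)

print(result)
```

The same comparison can help verify that a backup is clean before it is used for restoration, as long as the baseline predates the intrusion.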

Notable data breaches

Most confirmed data breaches occur in the finance industry, followed by information services, manufacturing and education, according to the Verizon 2022 "Data Breach Investigations Report." There have been many major data breaches at both large enterprises and government agencies in recent years.

Colonial Pipeline

In May 2021, Colonial Pipeline, a major oil pipeline operator in the U.S., succumbed to a ransomware attack that affected the automated operational technologies used to manage oil flow. The incident affected more than a dozen states on the East Coast and took several months to fully recover from, even though the company paid the ransom to restore critical data and software that had been stolen and rendered unusable.

Microsoft

In March 2021, Microsoft disclosed a massive cyber attack campaign against its Exchange email server software that affected an estimated 60,000 organizations worldwide. In this case, hackers took advantage of several zero-day vulnerabilities in on-premises Microsoft Exchange servers. Organizations running the compromised servers had their emails exposed, and the hackers installed malware and backdoors so they could further penetrate unsuspecting businesses and governments.

SolarWinds

In 2020, SolarWinds was the target of a cybersecurity attack in which hackers used a supply chain attack to deploy malicious code into its widely adopted Orion IT monitoring and management software. The breach left the networks, systems and data of many SolarWinds government and enterprise customers compromised.

Information security company FireEye discovered and publicized the attack. While questions remain, U.S. cybersecurity officials claim that Russian intelligence services spearheaded the attack. The extent of the data exposed and the purpose of the breach are still unknown, but the focus on government agencies points to cyberespionage as the likely purpose.

Sony Pictures

In late 2014, Sony Pictures Entertainment's corporate network was shut down when threat actors executed malware that disabled workstations and servers. A hacker group known as Guardians of Peace claimed responsibility for the data breach; the group leaked unreleased films that had been stolen from Sony's network, as well as confidential emails from company executives.

Guardians of Peace was believed to have ties to North Korea, and cybersecurity experts and the U.S. government later attributed the data breach to the North Korean government.

During the breach, the hacker group issued threats related to Sony's 2014 comedy, The Interview, prompting the company to cancel the film's planned wide release in movie theaters. The film featured the assassination of a fictional version of North Korean leader Kim Jong-un.

Target

In 2013, retailer Target Corp. disclosed it had suffered a major data breach that exposed customer names and credit card information. The Target data breach affected 110 million customers and led to several lawsuits from customers, state governments and credit card companies. All told, the company paid tens of millions of dollars in legal settlements.

Yahoo

Yahoo suffered a massive data breach in 2013, though the company didn't discover the incident until 2016 when it began investigating a separate security incident.

Initially, Yahoo announced that more than 1 billion email accounts were affected in the breach. Exposed user data included names, contact information and dates of birth, as well as hashed passwords and some encrypted or unencrypted security questions and answers. Following a full investigation into the 2013 data breach, Yahoo disclosed that the incident affected all of the company's 3 billion email accounts.

Yahoo also discovered a second major breach that occurred in 2014 affecting 500 million email accounts. The company found that threat actors had gained access to its corporate network and minted authentication cookies that enabled them to access email accounts without passwords.

Following a criminal investigation into the 2014 breach, the U.S. Department of Justice indicted four men, including two Russian Federal Security Service agents, in connection with the hack.

Technology innovation has yet to thwart sophisticated criminals who continue to use new technologies to steal valuable information that can be bought and sold on the dark web. To combat this, organizations must implement strong security controls and automated monitoring software that can continuously scan and identify potential threats.
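One simple form of automated monitoring is flagging bursts of failed logins, a common sign of the password-guessing attacks described earlier. The following Python sketch assumes login events arrive in time order. The class name and the threshold of five failures within 10 minutes are hypothetical choices for illustration, not values taken from any standard.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Hypothetical thresholds; tune them to the environment being monitored.
MAX_FAILURES = 5
WINDOW = timedelta(minutes=10)


class FailedLoginMonitor:
    """Flags accounts with too many failed logins inside a sliding time window."""

    def __init__(self, max_failures: int = MAX_FAILURES, window: timedelta = WINDOW):
        self.max_failures = max_failures
        self.window = window
        self._failures: dict[str, deque] = defaultdict(deque)

    def record_failure(self, user: str, at: datetime) -> bool:
        """Record one failed login; return True if the account should be flagged."""
        q = self._failures[user]
        q.append(at)
        # Drop failures that have aged out of the sliding window.
        while q and at - q[0] > self.window:
            q.popleft()
        return len(q) >= self.max_failures


monitor = FailedLoginMonitor()
start = datetime(2024, 1, 1, 12, 0)
alerts = [monitor.record_failure("alice", start + timedelta(minutes=i)) for i in range(5)]
print(alerts)  # [False, False, False, False, True]
```

A real monitoring pipeline would also correlate failures across accounts and source addresses, since attackers often spread guesses thinly to stay under per-account thresholds.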
