adam121 - Fotolia

Not just politics: Disinformation campaigns hit enterprises, too

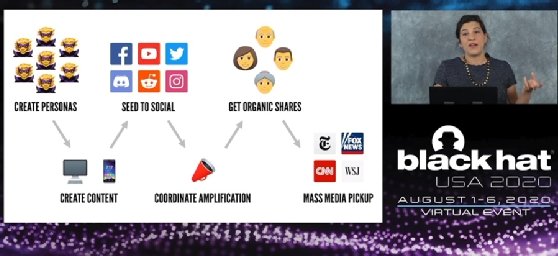

In her Black Hat USA 2020 keynote, Renée DiResta of the Stanford Internet Observatory explains how nation-state hackers have launched 'reputational attacks' against enterprises.

When it comes to nation-state disinformation campaigns on social media, U.S. elections and political candidates aren't the only targets.

Renée DiResta, research manager at the Stanford Internet Observatory, has been studying how disinformation and propaganda spread across social media platforms, as well as mainstream news cycles, to create "malign narratives." In her keynote address for Black Hat USA 2020, titled "Hacking Public Opinion," she warned that enterprises are also at risk of nation-state disinformation campaigns.

"While I've focused on state actors hacking public opinions on political topics, the threat is actually broader," she said. "Reputational attacks on companies are just as easy to execute."

DiResta began her keynote by describing how advanced persistent threat (APT) groups from Russia and China have waged successful disinformation campaigns to cause disruption around elections and geopolitical issues. These campaigns usually have one of four primary goals: distract the public from an issue or news story that has a negative impact on the nation-state; persuade the public to adopt the nation-state's view of an issue; entrench public opinion deeper on a particular issue; or divide the public by amplifying dissenting opinions on both sides of an issue.

DiResta said these campaigns often create online personas, groups and even fake sources for news media in order to produce content or malign narratives and to engage in coordinated seeding and amplification on social media. Examples include the notorious Russian troll farm known as the Internet Research Agency, which aimed to sway public opinion around the 2016 U.S. presidential election, as well as the "hack-and-leak" attack on the Democratic National Committee in 2016 by a Russian APT and the subsequent disinformation around the Guccifer 2.0 persona.

But in addition to government or political entities, DiResta said nation-state threat actors have targeted companies in industries such as agriculture or energy in order to gain competitive economic advantages or to serve larger geopolitical goals. She cited a 2018 congressional report from the House Committee on Science, Space and Technology that described how Russian state actors used troll accounts to launch reputational attacks on U.S. energy companies over "fracking."

"You can see those same models applied to attack the reputations of businesses online as well," she said. "Companies that take a strong stand on divisive social issues may also find themselves embroiled in social media chatter that isn't necessarily what it seems to be."

Threat actors, for example, may try to "erode social cohesion" by amplifying existing tensions and inflaming those social media conversations. And those murky waters can be tough for enterprises to navigate, she said.

"Very few companies will know where in the org chart to put responsibility for understanding those kinds of activities," DiResta said. "Just because a lot of mentions of your brand are happening, that doesn't necessarily mean they're authentic or inauthentic, so this really kind of falls to the CISO at this point to try to understand when these attacks are focused on corporations, when they should respond, and how they should think about them."

DiResta said such reputational attacks and disinformation campaigns require more from enterprises than just social media analysis. "We need to be doing more red teaming," she said. "We need to be thinking about the social and media ecosystem as a system, proactively envisioning what kinds of manipulation are possible."

Still, DiResta said it's extremely difficult to determine how much a specific disinformation campaign may have affected public opinion, whether it concerns a political issue or a corporate brand. The Stanford Internet Observatory maps how disinformation spreads and how narratives are constructed on media platforms, but she said more work needs to be done on their effects.

"We can see how people are reacting to this stuff but can't really see if it changed hearts and minds," she said.

Black Hat co-founder Jeff Moss agreed that more analysis is needed to get a better handle on the problem.

"There's not enough actual research being developed and studied to inform policymakers to tell us what to do about it," he said during his introduction of DiResta.